Bagging Machine Learning Explained

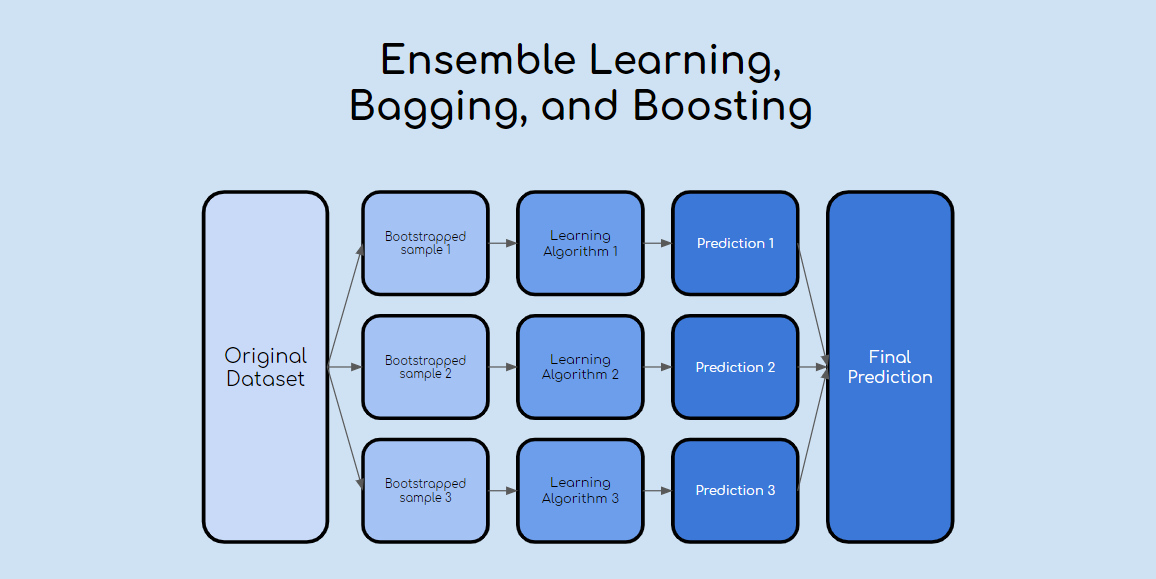

Ensemble learning can be broadly categorized into bagging and boosting, both of which combine several models into a single predictor.

What Is Bagging In Machine Learning And How To Perform Bagging

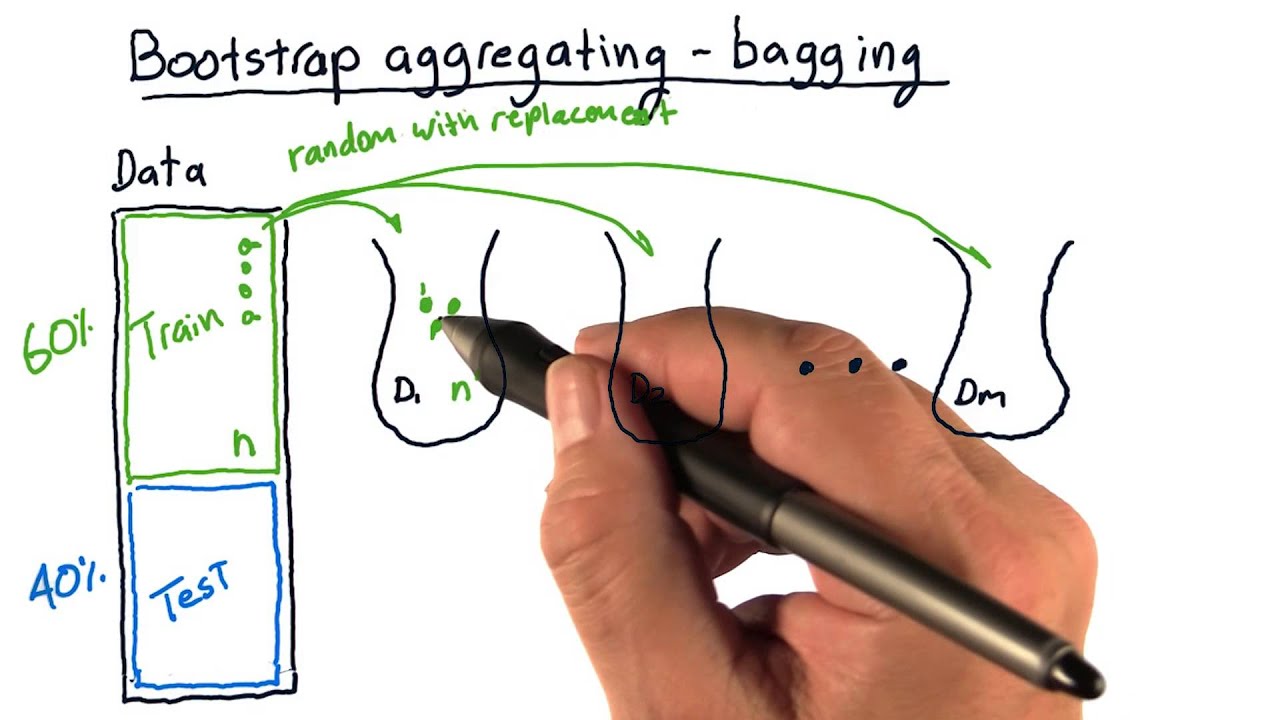

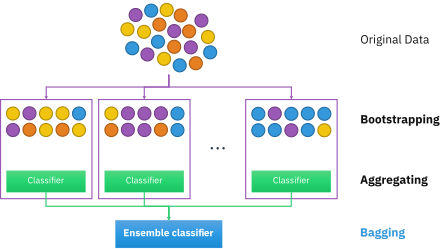

Bagging, also known as bootstrap aggregating, builds on the majority-voting principle: several models each make a prediction, and the most common prediction wins.
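A minimal sketch of the majority-voting step on its own, using only the Python standard library. The function name `majority_vote` and the example labels are made up for illustration; how ties are handled is an assumption, not something the text specifies.

```python
from collections import Counter

def majority_vote(predictions):
    """Return the label predicted by the most models.

    Ties go to the label that appears first in the list (an arbitrary choice).
    """
    return Counter(predictions).most_common(1)[0][0]

# Hypothetical votes from five models for a single input.
votes = ["spam", "ham", "spam", "spam", "ham"]
print(majority_vote(votes))
```

In a bagged classifier, each vote would come from a model trained on a different bootstrap sample.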

Bagging is typically used when you want to reduce variance while leaving bias roughly unchanged. The process itself is very simple.
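One standard way to make the variance claim concrete is the variance of an average of correlated predictions. The symbols are assumptions introduced here: $B$ base models $\hat f_1, \dots, \hat f_B$, each with prediction variance $\sigma^2$ at a point $x$, and pairwise correlation $\rho$ between any two of them.

$$
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B}\hat f_b(x)\right) = \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2
$$

As $B$ grows, the second term shrinks toward zero while the first stays put, so the less correlated the base models are, the more averaging helps. Averaging identically distributed models does not change their expected value, which is why the bias stays roughly where it was.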

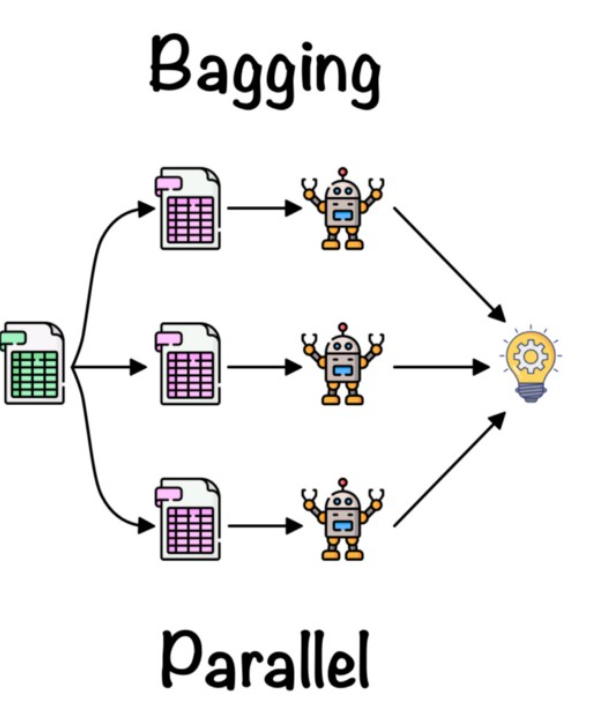

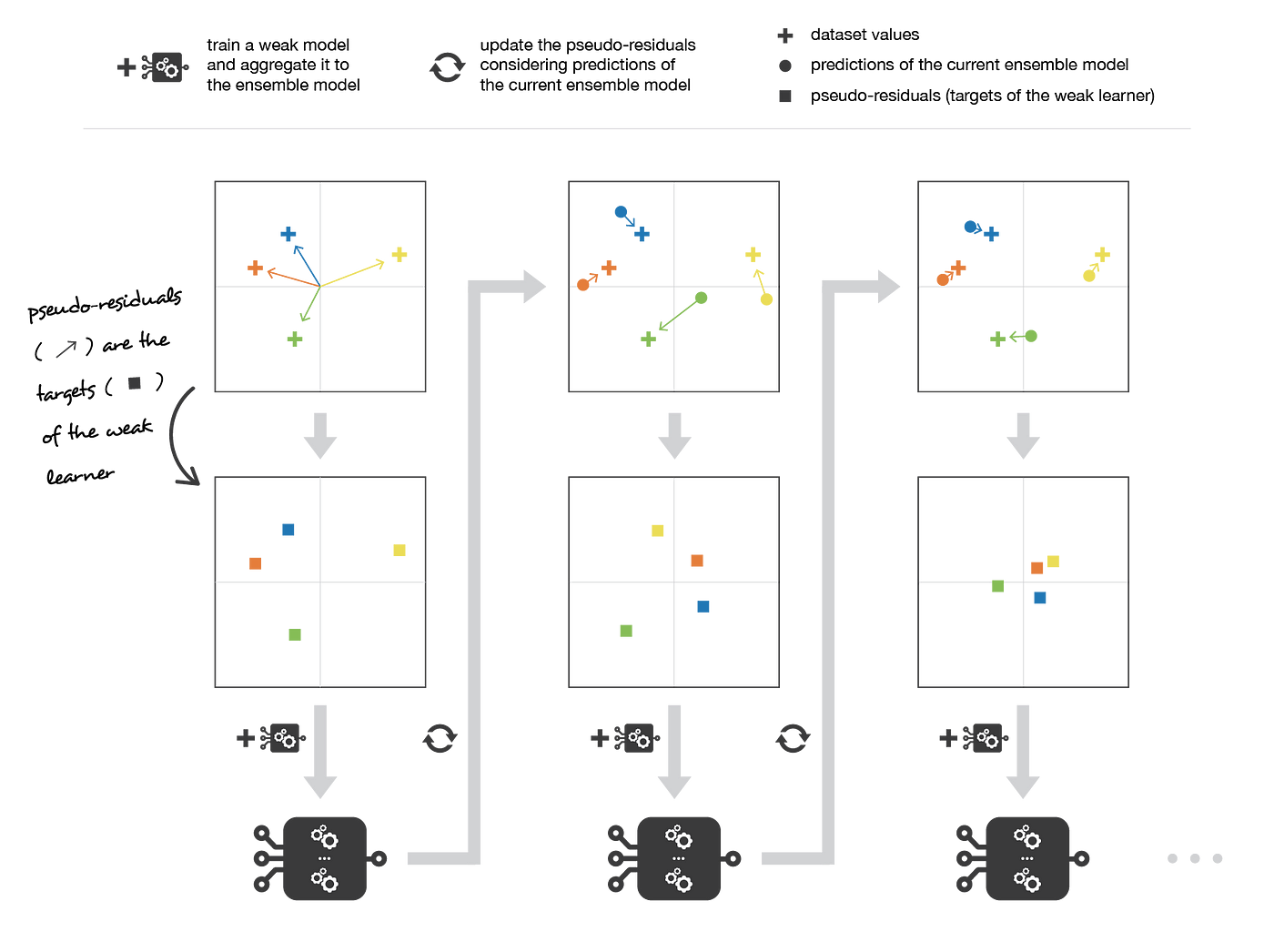

In bagging, the weak learners are trained independently of one another, and therefore in parallel, each on a randomly drawn sample of the data. The technique is useful for both regression and statistical classification.

It does this by drawing random subsets of the original dataset with replacement and fitting either a classifier (for classification) or a regressor (for regression) to each subset. Decision trees trained on similar data tend to be highly correlated in their predictions.
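Drawing a random subset with replacement can be sketched in a few lines of standard-library Python. The helper name `bootstrap_sample` and the ten-row toy dataset are assumptions for illustration only.

```python
import random

def bootstrap_sample(data, rng):
    """Draw len(data) items from data uniformly at random, with replacement."""
    return [rng.choice(data) for _ in range(len(data))]

rng = random.Random(0)     # fixed seed so the run is repeatable
data = list(range(10))     # toy dataset: ten row identifiers
sample = bootstrap_sample(data, rng)

print(sample)                            # some rows repeat, others are missing
print(sorted(set(data) - set(sample)))   # rows left out of this particular sample
```

Because rows are drawn with replacement, each sample has the same size as the original but a different mix of rows, which is what makes the fitted models differ from one another.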

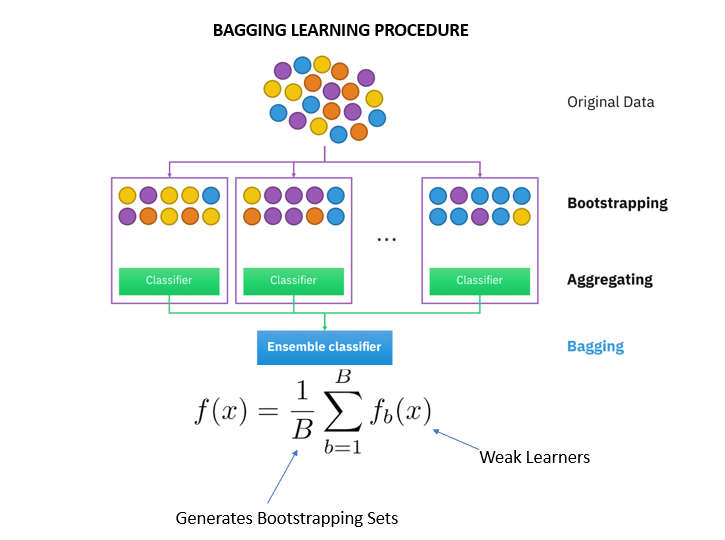

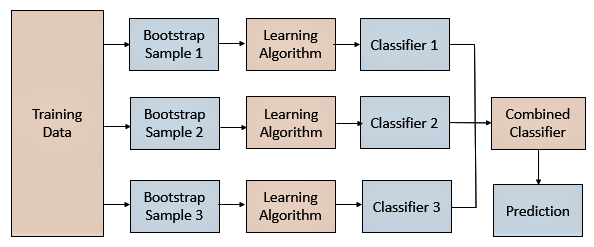

A bagging classifier is an ensemble meta-estimator that fits base classifiers, each on a random subset of the original dataset, and then aggregates their individual predictions, by voting or by averaging, to form a final prediction. Bagging aims to improve the accuracy and performance of machine learning algorithms. The name is a contraction of bootstrap aggregation, and the technique is used to decrease the variance of the prediction model.
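To show the fit-then-aggregate idea end to end, here is a from-scratch sketch in standard-library Python. It is not any library's implementation: the base classifier is a one-feature decision stump chosen for brevity, and the names `fit_stump`, `fit_bagging`, `predict_bagging`, and the toy data are all assumptions made for this example.

```python
import random
from collections import Counter

def fit_stump(xs, ys):
    """Fit a one-feature threshold classifier (a decision stump).

    Tries every observed value as a threshold, in both orientations,
    and keeps the rule with the fewest training errors.
    """
    best = None
    for t in sorted(set(xs)):
        for left, right in ((0, 1), (1, 0)):
            errors = sum((left if x <= t else right) != y for x, y in zip(xs, ys))
            if best is None or errors < best[0]:
                best = (errors, t, left, right)
    _, t, left, right = best
    return lambda x: left if x <= t else right

def fit_bagging(xs, ys, n_estimators, rng):
    """Fit n_estimators stumps, each on its own bootstrap sample."""
    n = len(xs)
    models = []
    for _ in range(n_estimators):
        idx = [rng.randrange(n) for _ in range(n)]   # row indices drawn with replacement
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models

def predict_bagging(models, x):
    """Aggregate the individual predictions by majority vote."""
    return Counter(m(x) for m in models).most_common(1)[0][0]

# Toy data, made up for illustration: mostly 0 for small x and 1 for large x,
# with one point (x = 4) that breaks the pattern.
xs = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
ys = [0, 0, 0, 1, 0, 1, 1, 1, 1, 1]

models = fit_bagging(xs, ys, n_estimators=25, rng=random.Random(0))
print([predict_bagging(models, x) for x in (0, 11)])
```

In practice you would more likely reach for an existing implementation such as scikit-learn's `BaggingClassifier`; the point of the sketch is only to show where the bootstrap and the vote sit.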

Bagging, short for bootstrap aggregating, combines the results of several learners trained on bootstrapped samples of the training data. It is a powerful ensemble method that helps to reduce variance and, by extension, overfitting.

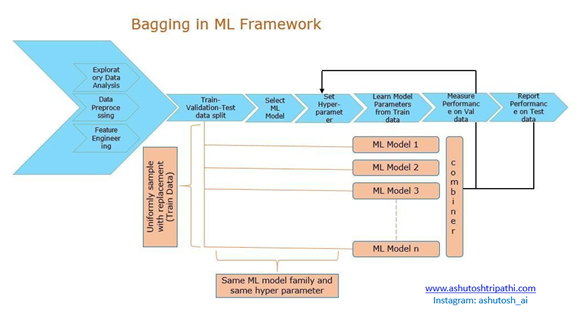

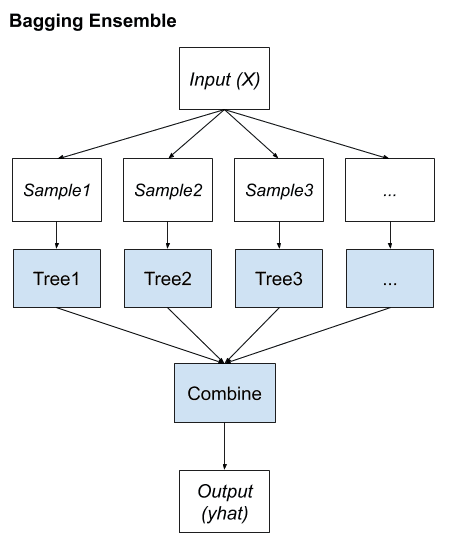

There's no outright winner between bagging and boosting; it depends on the data, the simulation, and the circumstances. As we have seen, bagging draws random samples with replacement to train n base learners, and because the learners are independent of one another, they can be trained in parallel. What are the pitfalls of bagging algorithms?

This happens when you average the predictions in different regions of the input space.

With minor modifications, these algorithms are also known by other names.

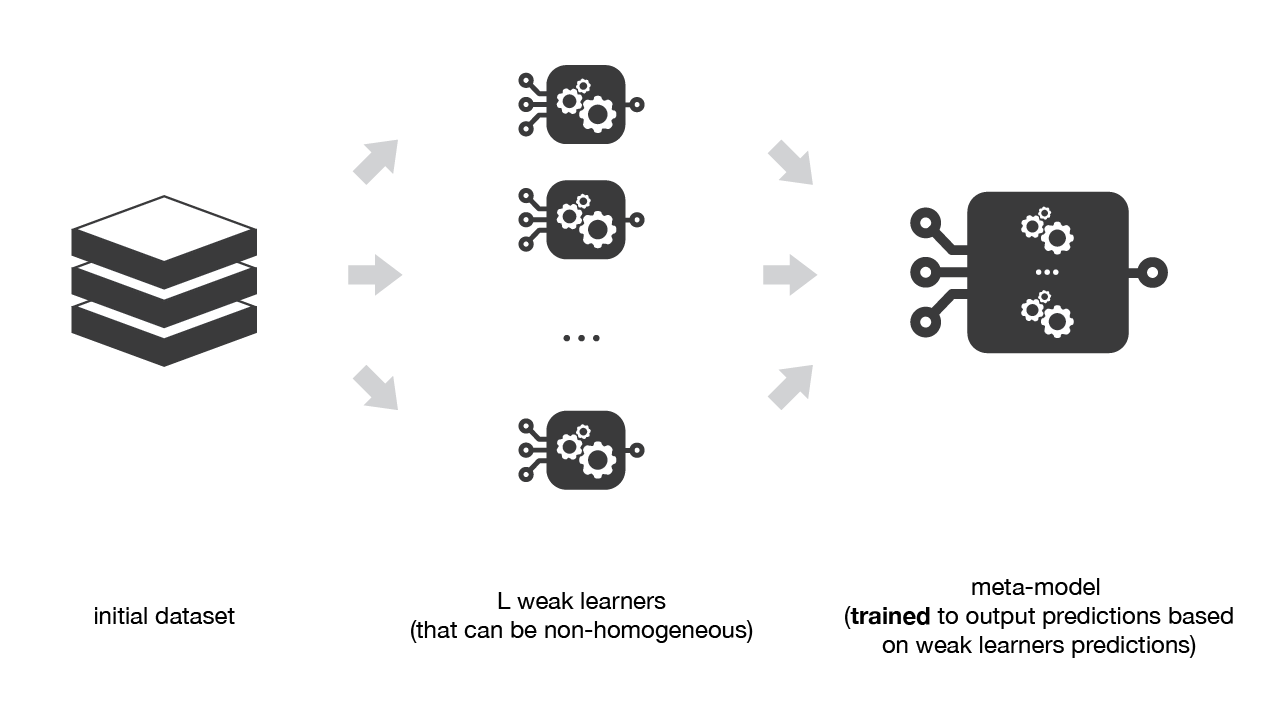

Bagging is a parallel method that fits the different learners independently of one another. It consists of fitting several base models on different bootstrap samples and building an ensemble model that averages the results of these weak learners.
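For regression, "averaging the results" can be sketched as follows. The base model is a 1-nearest-neighbour regressor, picked here only because it is short and high-variance; the names `fit_1nn` and `fit_bagged_regressor` and the synthetic data are assumptions for illustration.

```python
import random
from statistics import mean

def fit_1nn(xs, ys):
    """Return a 1-nearest-neighbour regressor: predict the target of the closest x."""
    points = list(zip(xs, ys))
    return lambda x: min(points, key=lambda p: abs(p[0] - x))[1]

def fit_bagged_regressor(xs, ys, n_estimators, rng):
    """Fit one base model per bootstrap sample and average their predictions."""
    n = len(xs)
    models = []
    for _ in range(n_estimators):
        idx = [rng.randrange(n) for _ in range(n)]   # row indices drawn with replacement
        models.append(fit_1nn([xs[i] for i in idx], [ys[i] for i in idx]))
    return lambda x: mean(m(x) for m in models)

# Synthetic data: y = 2x plus Gaussian noise (made up for this example).
rng = random.Random(0)
xs = [i / 10 for i in range(50)]
ys = [2 * x + rng.gauss(0, 1) for x in xs]

single = fit_1nn(xs, ys)
bagged = fit_bagged_regressor(xs, ys, n_estimators=50, rng=rng)

print(single(2.5))   # follows the noise of a single training point
print(bagged(2.5))   # average over models that saw different samples
```

Each base model simply memorises its own sample, so the individual predictions jump around; the average is smoother because neighbouring training points get mixed in across samples.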

The samples are bootstrapped each time a model is trained. In this post we explore the bagging algorithm and a computationally more efficient variant of it, subagging. Let's assume we have a sample dataset of 1000 observations.
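The difference between the two sampling schemes can be sketched on a dataset of 1000 rows. Subagging is read here as subsample aggregating, that is, drawing fewer than n rows without replacement; the half-size subsample (`m = n // 2`) is an assumed choice for illustration, not something the text prescribes.

```python
import random

rng = random.Random(0)
n = 1000
rows = list(range(n))          # stand-in for 1000 observations

# Bagging: n draws with replacement, so duplicates are expected.
bootstrap = rng.choices(rows, k=n)

# Subagging: m < n draws without replacement, so every row is distinct.
m = n // 2                     # assumed subsample size
subsample = rng.sample(rows, m)

print(len(set(bootstrap)) / n)   # fraction of distinct rows, typically around 0.63
print(len(set(subsample)) / m)   # always 1.0
```

The expected share of distinct rows in a bootstrap sample is 1 - (1 - 1/n)^n, which is about 0.632 for n = 1000. A subsample is cheaper because each base model is fitted on fewer rows.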

Boosting should not be confused with bagging, which is the other main family of ensemble methods. Bagging is the application of the bootstrap procedure to a high-variance machine learning algorithm, typically decision trees. Bootstrap aggregating, also known as bagging, is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms.

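The bias-variance trade-off is a challenge we all face when training machine learning algorithms. The principle of bagging is easy to understand: instead of relying on a single model fitted to the full training set, it fits several models on bootstrap samples and combines them. Bagging is a powerful method to improve the performance of simple models and to reduce the overfitting of more complex ones.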

Difference Between Bagging And Random Forest Machine Learning Supervised Machine Learning Learning Problems

What Is Boosting In Machine Learning By James Thorn Towards Data Science

Ensemble Methods In Machine Learning Examples Data Analytics

Bootstrap Aggregating Wikipedia

What Is Bagging In Ensemble Learning Data Science Duniya

Ensemble Learning Explained Part 1 By Vignesh Madanan Medium

Bagging Ensemble Meta Algorithm For Reducing Variance By Ashish Patel Ml Research Lab Medium

What Is Bagging Vs Boosting In Machine Learning

Ensemble Methods In Machine Learning Bagging Versus Boosting Pluralsight

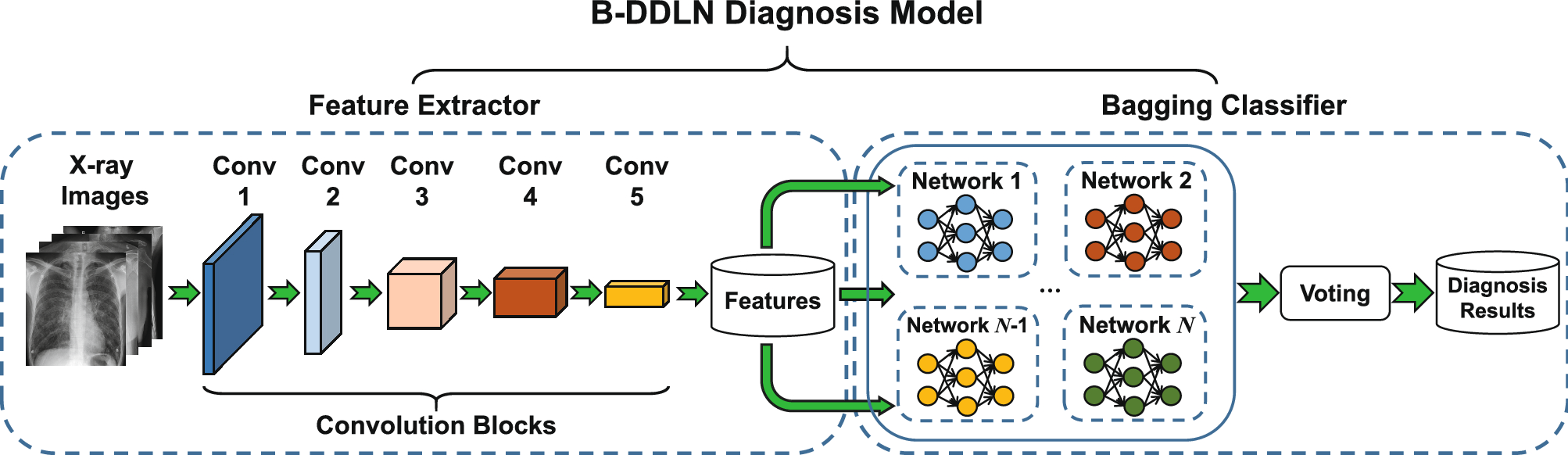

A Bagging Dynamic Deep Learning Network For Diagnosing Covid 19 Scientific Reports

Ensemble Methods Bagging Boosting And Stacking By Joseph Rocca Towards Data Science

Ensemble Learning Bagging And Boosting Explained In 3 Minutes By Terence Shin Towards Data Science

Bagging Bootstrap Aggregation Overview How It Works Advantages

Introduction To Random Forest In Machine Learning Engineering Education Enged Program Section

A Gentle Introduction To Ensemble Learning Algorithms

Types Of Ensemble Methods In Machine Learning By Anju Rajbangshi Towards Data Science